Visual-Inertial System (VINS) with Stereo Vision and GPU Acceleration

Note: This tutorial applies to the Kerloud SLAM Indoor set only.

The Kerloud SLAM Indoor set is equipped with an NVIDIA Jetson TX2 module and an Intel RealSense D435i stereo camera. The TX2's onboard GPU lets the set perform fully autonomous indoor localization from onboard visual-inertial sensing alone, without external positioning. The VINS system opens up broad opportunities for vision-aided autonomy and related applications such as SLAM and augmented reality (AR). The product is intended for advanced users who want to dig deep into computer vision and robot autonomy.

Background

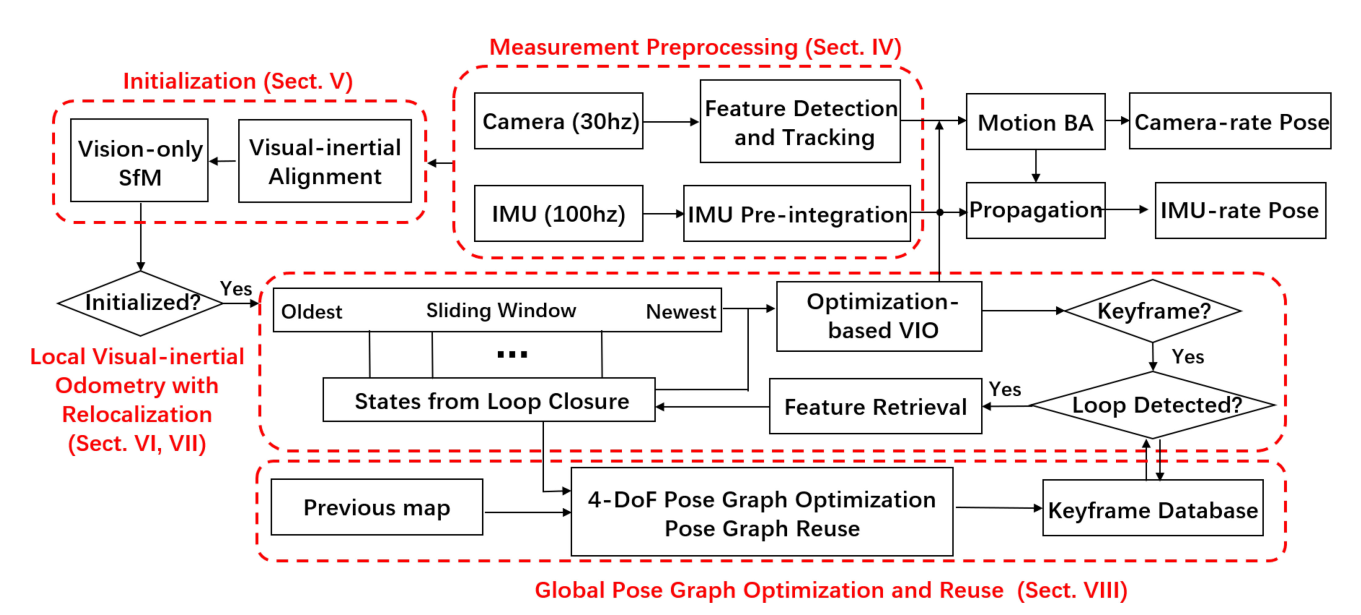

The VINS system is based on the original work from the HKUST Aerial Robotics Group (https://uav.hkust.edu.hk). The related resources can be found in the Reference section of this tutorial. The approach fuses the IMU data seamlessly with onboard computer vision, and can output real-time pose estimation. It provides a complete pipeline from front-end IMU preintegration to backend global optimization, as depicted in the figure below:
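The front-end IMU preintegration mentioned above can be written out following the formulation of the VINS-Mono paper listed in References. What follows is a condensed sketch with the noise terms omitted. Between consecutive image frames $k$ and $k+1$, the accelerometer and gyroscope readings $\hat{a}_t$ and $\hat{\omega}_t$ are integrated in the body frame $b_k$ into position, velocity and rotation increments:

$$
\begin{aligned}
\alpha^{b_k}_{b_{k+1}} &= \iint_{t \in [t_k,\, t_{k+1}]} R^{b_k}_{t}\,\bigl(\hat{a}_t - b_{a_t}\bigr)\, dt^2 \\
\beta^{b_k}_{b_{k+1}} &= \int_{t \in [t_k,\, t_{k+1}]} R^{b_k}_{t}\,\bigl(\hat{a}_t - b_{a_t}\bigr)\, dt \\
\gamma^{b_k}_{b_{k+1}} &= \int_{t \in [t_k,\, t_{k+1}]} \tfrac{1}{2}\,\Omega\bigl(\hat{\omega}_t - b_{w_t}\bigr)\,\gamma^{b_k}_{t}\, dt
\end{aligned}
$$

The symbols are defined as follows:

- $R^{b_k}_{t}$ rotates vectors from the body frame at time $t$ into $b_k$.
- $b_{a_t}$ and $b_{w_t}$ are the accelerometer and gyroscope biases.
- $\Omega(\cdot)$ is the matrix form of quaternion multiplication by an angular rate.

These increments depend only on the IMU readings and the biases, not on the world-frame pose or velocity at frame $k$. As a result, the back-end optimizer can reuse them whenever it re-estimates the states, instead of re-integrating the raw IMU stream. Small bias changes are absorbed with a first-order correction.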

What’s Inside

The original open-source code cannot be deployed on a UAV directly; considerable setup is required before a flight can be attempted. Our work covers the following:

- Complete environment setup with the necessary prerequisites (OpenCV, Intel RealSense libraries, etc.)
- Camera calibration of both intrinsic and extrinsic parameters
- VINS-Fusion configuration for RealSense stereo vision
- Integration with the autopilot for low-level state estimation
- Dataset simulation, handheld tests and autonomous flight tests

How to Use

The VINS workspace, located at ~/src/catkin_ws_VINS-Fusion-gpu, contains the following packages:

- VINS-Fusion-gpu: the VINS algorithm package with GPU acceleration, customized for the Kerloud UAV.
- vision_opencv: a package that interfaces ROS with OpenCV.
- pose_converter: a package that bridges the VINS odometry output to the autopilot.
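Before launching anything, it can help to confirm that the workspace actually contains these packages and has been built. The helper below is a minimal sketch. It assumes each package (VINS-Fusion-gpu, vision_opencv, pose_converter) lives in a directory of the same name under the workspace's `src` folder, and that a built catkin workspace has `devel/setup.bash`. The first point is an assumption about the layout, not something this tutorial specifies. The function name `check_vins_workspace` is hypothetical.

```bash
#!/usr/bin/env bash
# HYPOTHETICAL helper: check that the VINS workspace has the expected packages.
# Assumption: each package sits in a directory of the same name under <ws>/src.

check_vins_workspace() {
  local ws="${1:-$HOME/src/catkin_ws_VINS-Fusion-gpu}"
  local missing=0 pkg
  for pkg in VINS-Fusion-gpu vision_opencv pose_converter; do
    if [[ -d "$ws/src/$pkg" ]]; then
      echo "found:   $pkg"
    else
      echo "missing: $pkg" >&2
      missing=1
    fi
  done
  # A built catkin workspace provides devel/setup.bash; flag it if absent.
  if [[ ! -f "$ws/devel/setup.bash" ]]; then
    echo "not built: $ws/devel/setup.bash is absent" >&2
    missing=1
  fi
  return "$missing"
}
```

Source the file and run `check_vins_workspace`, or pass another workspace path as the first argument. It returns non-zero if any package folder or the build output is missing.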

Launch the VINS system with the following commands, running each numbered step in its own terminal:

```bash
# terminal 1
roscore

# terminal 2: launch mavros node
cd ~/src/catkinws_realsense \
&& source devel/setup.bash \
&& roslaunch mavros px4.launch fcu_url:=/dev/ttyPixhawk:921600

# terminal 3: launch realsense driver node
cd ~/src/catkinws_realsense \
&& source devel/setup.bash \
&& roslaunch realsense2_camera rs_d435i_kerloud_stereo_slam.launch

# terminal 4: launch VINS node
cd ~/src/catkin_ws_VINS-Fusion-gpu \
&& source devel/setup.bash \
&& rosrun vins vins_node /home/ubuntu/src/catkin_ws_VINS-Fusion-gpu/src/VINS-Fusion-gpu/config/kerloud_tx2_d435i/realsense_stereo_imu_config.yaml

# (Optional) terminal 5: launch vins-loop-fusion node
cd ~/src/catkin_ws_VINS-Fusion-gpu \
&& source devel/setup.bash \
&& rosrun loop_fusion loop_fusion_node /home/ubuntu/src/catkin_ws_VINS-Fusion-gpu/src/VINS-Fusion-gpu/config/kerloud_tx2_d435i/realsense_stereo_imu_config.yaml

# terminal 6: launch pose_converter node
cd ~/src/catkin_ws_VINS-Fusion-gpu \
&& source devel/setup.bash \
&& roslaunch pose_converter poseconv.launch

# (Optional) terminal 7: launch rviz for visualization
cd ~/src/catkin_ws_VINS-Fusion-gpu \
&& source devel/setup.bash \
&& roslaunch vins vins_rviz.launch
```

Alternatively, start everything with the bundled script:

```bash
cd ~/src/catkin_ws_VINS-Fusion-gpu \
&& bash run.sh
```
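The contents of run.sh are not reproduced in this tutorial. For readers who want to script the terminal sequence themselves, the following is a hypothetical sketch, not the shipped run.sh. It starts each required stage in the background and waits for the previous stage to come up before starting the next. The optional loop fusion and rviz stages are left out.

The sketch rests on these assumptions:

- The base ROS environment is already sourced.
- MAVROS advertises `/mavros/state`.
- VINS publishes odometry on `/vins_estimator/odometry`, the upstream VINS-Fusion default; the Kerloud build may differ.

The function names `wait_for_topic` and `launch_vins_stack` are illustrative.

```bash
#!/usr/bin/env bash
# HYPOTHETICAL launcher sketch, not the run.sh shipped with the Kerloud image.
# Mirrors the manual sequence: roscore, MAVROS, RealSense, VINS, pose_converter.
# Assumes the base ROS environment is already sourced.

VINS_WS="${VINS_WS:-$HOME/src/catkin_ws_VINS-Fusion-gpu}"
RS_WS="${RS_WS:-$HOME/src/catkinws_realsense}"
VINS_CFG="$VINS_WS/src/VINS-Fusion-gpu/config/kerloud_tx2_d435i/realsense_stereo_imu_config.yaml"

# wait_for_topic TOPIC [TIMEOUT_S]
# Polls `rostopic list` once per second until TOPIC is advertised.
# Returns 0 once the topic appears, 1 after TIMEOUT_S seconds (default 30).
wait_for_topic() {
  local topic="$1" timeout="${2:-30}" elapsed=0
  until rostopic list 2>/dev/null | grep -qxF -- "$topic"; do
    if (( elapsed >= timeout )); then
      echo "timed out after ${timeout}s waiting for $topic" >&2
      return 1
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
}

# launch_vins_stack: start each stage in the background, in order.
# Assumed readiness topics: /mavros/state (MAVROS) and
# /vins_estimator/odometry (upstream VINS-Fusion default); verify on your build.
launch_vins_stack() {
  # Background jobs in a non-interactive script ignore SIGINT, so forward
  # Ctrl-C/TERM to the whole process group explicitly.
  trap 'trap - INT TERM; kill 0' INT TERM

  roscore &
  wait_for_topic /rosout 30 || return 1

  (cd "$RS_WS" && source devel/setup.bash &&
    roslaunch mavros px4.launch fcu_url:=/dev/ttyPixhawk:921600) &
  wait_for_topic /mavros/state 30 || return 1

  (cd "$RS_WS" && source devel/setup.bash &&
    roslaunch realsense2_camera rs_d435i_kerloud_stereo_slam.launch) &
  sleep 5  # crude: give the camera driver time to start streaming

  (cd "$VINS_WS" && source devel/setup.bash &&
    rosrun vins vins_node "$VINS_CFG") &
  wait_for_topic /vins_estimator/odometry 60 || return 1

  (cd "$VINS_WS" && source devel/setup.bash &&
    roslaunch pose_converter poseconv.launch) &

  wait  # block until every background stage has exited
}
```

To use it, save the block as a script, add a final line that calls `launch_vins_stack`, and run it with `bash`. Running it as a script rather than sourcing it into an interactive shell keeps all stages in one process group, so Ctrl-C stops them together. Waiting on topics instead of fixed delays keeps the ordering close to the manual terminal sequence without guessing start-up times.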

Demo

Simulation with Dataset

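To run the simulation test with the EuRoC dataset, use the commands below, each in its own terminal:

```bash
# terminal 1: launch rviz (roslaunch also starts a ROS master if none is running)
cd ~/src/catkin_ws_VINS-Fusion-gpu \
&& source devel/setup.bash \
&& roslaunch vins vins_rviz.launch

# terminal 2: launch VINS node
cd ~/src/catkin_ws_VINS-Fusion-gpu \
&& source devel/setup.bash \
&& rosrun vins vins_node ~/src/catkin_ws_VINS-Fusion-gpu/src/VINS-Fusion-gpu/config/euroc/euroc_stereo_imu_config.yaml

# (Optional) terminal 3: launch loop fusion node
cd ~/src/catkin_ws_VINS-Fusion-gpu \
&& source devel/setup.bash \
&& rosrun loop_fusion loop_fusion_node ~/src/catkin_ws_VINS-Fusion-gpu/src/VINS-Fusion-gpu/config/euroc/euroc_stereo_imu_config.yaml

# terminal 4: play the dataset
rosbag play YOUR_DATASET_FOLDER/MH_01_easy.bag
```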
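When replaying EuRoC sequences repeatedly, a small wrapper can check the bag path before starting playback. The sketch below is hypothetical:

- `replay_euroc` is an illustrative name.
- The default sequence `MH_01_easy` mirrors the command above.
- The optional rate argument is passed to `rosbag play -r`, which scales playback speed. Values below 1.0 give the estimator more time per frame.

```bash
#!/usr/bin/env bash
# HYPOTHETICAL helper: validate a EuRoC bag path, then replay it with rosbag.
# Usage: replay_euroc DATASET_DIR [SEQUENCE] [RATE]

replay_euroc() {
  local dir="$1" seq="${2:-MH_01_easy}" rate="${3:-1.0}"
  local bag="$dir/$seq.bag"
  if [[ ! -f "$bag" ]]; then
    echo "bag not found: $bag" >&2
    return 1
  fi
  # -r scales the playback rate; values below 1.0 slow the replay down.
  rosbag play -r "$rate" "$bag"
}
```

For example, `replay_euroc YOUR_DATASET_FOLDER MH_01_easy 0.5` replays the same sequence at half speed.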

Ground Test

VINS handheld test:

VINS dynamic ground test:

Autonomous Indoor Hovering Flight

References

VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator, Tong Qin, Peiliang Li, Zhenfei Yang, Shaojie Shen, IEEE Transactions on Robotics, 2018.